05/03/2023

Technological Ethnocentrism

it’s time to build equity in tech

My undergrad dissertation centered on maternal and child health. My data came primarily from white women, even though Black women are over three times more likely than white women to die during pregnancy or the postpartum period.

--

Pratap Chikanna is a farmer in Kodigehalli, a village roughly 5 hours by road from Bengaluru. His family has grown cardamom for years. Now, Chikanna is old school: until a few years ago, he relied on cash for his everyday transactions. But sometime in 2018, he decided to go digital and opened an account with a public sector bank. It was a good decision, because government subsidies are now transferred directly into that account.

Kodigehalli, a village near Bengaluru

But there’s a problem.

Updates from the bank arrive by SMS, and Chikanna can’t understand them because he does not understand English. So he walks 6 km to the nearest town, Sakleshpur, to find someone who speaks English and can translate the messages for him. The trip takes more than an hour each way, costing him time, energy, and half a day’s work, all because he can’t read English.

--

BKS, a retired government employee from Kolkata, has invested in the stock market for several years. He believes, as many others do, that his limited English has cost him several lucrative opportunities. Corporate announcements, IPO prospectuses, and other critical information are typically released only in English, which makes them hard for non-English speakers to access and understand. As a result, people who are not fluent in English are effectively locked out of the benefits that this information brings.

--

This is a problem because fewer than 10% of Indians speak English. That means over a BILLION people, like Chikanna and BKS, cannot understand any interaction that takes place exclusively in English. Shocking as that is, it is just one of the ways language barriers stand in the way of people from marginalized communities trying to live a decent and dignified life.

Think about an enthusiastic person from South Sudan trying to learn about the latest developments in Artificial Intelligence. Simply calling this a language barrier undersells the problem.

Technological ethnocentrism is not limited to linguistic supremacy.

The unequal distribution of tech-related resources and access can contribute to this phenomenon. For example, if a certain group has less access to technology or the internet, they may be excluded from participating fully in the digital world, which can have negative social and economic consequences.

(A related but separate issue: according to the World Bank, only about 28% of the population in sub-Saharan Africa had access to the internet in 2021.)

Ethnocentrism, the tendency to view one’s own culture or ethnic group as superior to others, can lead to bias and discrimination against other cultures and ethnic groups.

Ethnocentrism can manifest in technology in various ways. For example, the design and development of technology can be influenced by the cultural and social biases of the designers, leading to products that may not be suitable or accessible to people from different cultures or with different backgrounds.

AI algorithms learn patterns from data and make predictions based on them. If the data they learn from is biased, the resulting system will tend to reproduce that bias. This can lead to AI systems that discriminate against certain groups, including people of color, women, and other marginalized communities.

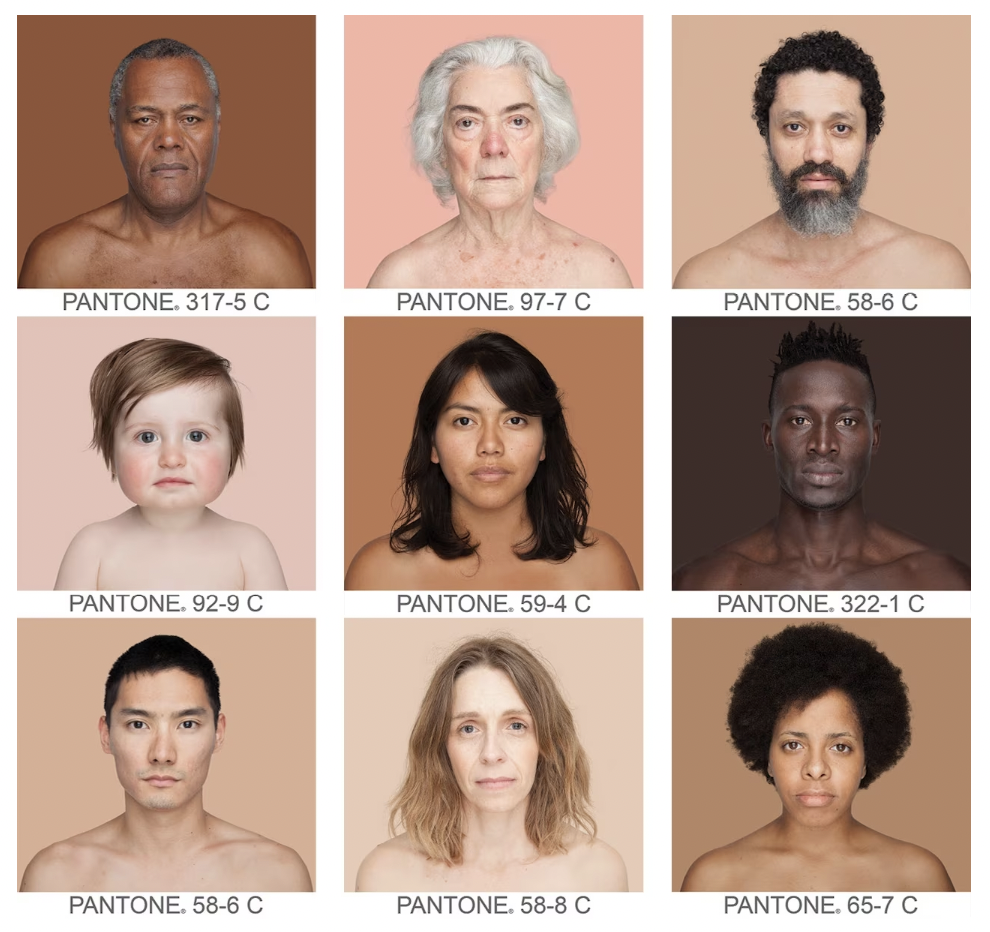

From National Geographic: An Artist Finds True Skin Colors in a Diverse Palette. Photographs by Angelica Dass.

One example is facial recognition software. Facial recognition algorithms identify and categorize human faces based on physical features such as the distance between the eyes, the shape of the nose, and the size of the mouth. However, many of these algorithms have been found to be less accurate at recognizing people with darker skin tones or from ethnic groups that are underrepresented in their training data (more on this below). These systems learn from images or videos of people’s faces, and when the training data is not diverse enough, the resulting algorithm is better at recognizing faces that resemble the majority of that data.
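One way to surface this kind of gap is to report accuracy separately for each demographic group instead of as a single number. The sketch below is a minimal illustration, assuming evaluation results are available as (group, predicted identity, true identity) tuples; the function name, group labels, and data are all hypothetical and made up for the example.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return recognition accuracy for each demographic group.

    records: iterable of (group, predicted_id, true_id) tuples
    (a hypothetical format chosen for this sketch).
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    # Accuracy per group = correct matches / attempts for that group.
    return {g: correct[g] / total[g] for g in total}


# Hypothetical evaluation results; labels and IDs are invented.
results = [
    ("lighter", "a", "a"), ("lighter", "b", "b"),
    ("lighter", "c", "c"), ("lighter", "d", "d"),
    ("darker", "e", "e"), ("darker", "f", "y"),
    ("darker", "g", "z"), ("darker", "h", "h"),
]

per_group = accuracy_by_group(results)
# A single aggregate score, as a system might be reported overall.
overall = sum(p == a for _, p, a in results) / len(results)

print(f"overall: {overall:.2f}")
for group, acc in sorted(per_group.items()):
    print(f"{group}: {acc:.2f}")
```

On this toy data the overall accuracy is 0.75, which hides the fact that the system is correct on every "lighter" sample but only half of the "darker" ones. Disaggregating the metric is what makes the disparity visible.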

Look at AI-powered hiring systems, too. These systems use algorithms to sort through resumes and identify the most promising candidates for a job. If the algorithm is trained on past hiring decisions that were biased, the system may replicate that bias and continue to discriminate against certain groups.
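A common first check for this kind of hiring bias is to compare selection rates across groups. The sketch below applies the "four-fifths rule" from the US Uniform Guidelines on Employee Selection Procedures, under which a group whose selection rate is below 80% of the highest group's rate is generally treated as evidence of adverse impact. The function names, group names, and screening outcomes are hypothetical, and this is a screening heuristic, not a complete fairness audit.

```python
def selection_rates(decisions):
    """decisions: list of (group, selected) pairs, selected is True/False."""
    counts = {}
    for group, selected in decisions:
        n, k = counts.get(group, (0, 0))
        counts[group] = (n + 1, k + int(selected))
    # Selection rate = candidates selected / candidates considered.
    return {g: k / n for g, (n, k) in counts.items()}


def adverse_impact(rates, threshold=0.8):
    """Return {group: ratio} for groups whose rate is below
    threshold x the highest group's rate (four-fifths rule by default)."""
    best = max(rates.values())
    if best == 0:
        return {}  # nobody was selected, so there is no ratio to compare
    return {g: r / best for g, r in rates.items() if r / best < threshold}


# Hypothetical outcomes from a resume-screening model.
decisions = (
    [("group_a", True)] * 30 + [("group_a", False)] * 70
    + [("group_b", True)] * 15 + [("group_b", False)] * 85
)

rates = selection_rates(decisions)
flagged = adverse_impact(rates)
print(rates)
print(flagged)
```

Here group_b is selected at half the rate of group_a, well under the 0.8 threshold, so it is flagged for closer human review rather than treated as proof of discrimination on its own.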

This bias is often tied to skin color: some technologies are designed and marketed primarily for people with lighter skin tones, while the needs of people with darker skin are ignored or neglected. Facial recognition is a clear case, because many systems are trained on datasets made up mostly of images of people with lighter skin tones, which makes them less effective at recognizing people with darker skin.

Let's look at another example, this time from medical devices. Many pulse oximeters, which measure a person's blood oxygen level, have been found to be less accurate on people with darker skin tones. These devices shine light through the skin and use sensors to measure how much of it is absorbed, and skin pigmentation also affects how light is absorbed. Because many pulse oximeters were designed and tested primarily on people with lighter skin tones, they can give inaccurate readings for people with darker skin.

These examples highlight the need for greater diversity and inclusion in the development and testing of new technologies. By including people with a range of skin tones and cultural backgrounds in the development process, it is possible to create technologies that are more accurate and effective for everyone, regardless of their skin color.

It is important to recognize and address these issues so that technology is inclusive and accessible to everyone, regardless of cultural or ethnic background. Companies and developers should be aware of potential biases in their technologies and take concrete steps to mitigate them:

Ensure that the training data used to build these algorithms is diverse and representative of all groups.

Build diverse teams of researchers and engineers, so that different perspectives and experiences are taken into account.

Test and evaluate AI systems on an ongoing basis to make sure they are not discriminating against any particular group.

What can we do? Here are 10 action items to consider:

Develop Ethical Standards: The development of ethical standards that prioritize fairness, transparency, and accountability in AI technologies is crucial to address the issue of technological ethnocentrism. These standards should consider the impact of AI on different communities and be developed in consultation with diverse stakeholders.

Encourage Open Collaboration: Encouraging open collaboration among different industries, communities, and researchers can help address technological ethnocentrism. Collaboration can facilitate the sharing of best practices and knowledge that can help overcome biases and create more inclusive AI systems.

Implement Bias Detection and Correction Tools: Companies should use tools that detect and correct biases in their algorithms, so that potential biases are caught and addressed rather than quietly reinforcing technological ethnocentrism.

Increase Diversity in the Workforce: Increasing diversity in the workforce can help address technological ethnocentrism. Companies should prioritize the hiring of people from diverse backgrounds, including people from underrepresented communities.

Consider Cultural and Linguistic Differences: Developers should consider cultural and linguistic differences when creating AI systems. AI should be designed to work with multiple languages and take into account cultural differences to ensure that everyone can use the technology effectively.

Regular Audit and Review: Regularly auditing and reviewing AI systems can help identify and address biases that emerge over time, ensuring that the technology keeps working fairly and inclusively.

Involve Affected Communities: It is essential to involve affected communities in the design and development of AI systems. This can help ensure that AI systems are designed to meet their needs and are culturally sensitive.

Foster a Culture of Transparency: Companies should be transparent about their AI systems and their decision-making processes. This can include providing explanations of how the systems work, as well as how they are trained and evaluated. This transparency can help build trust with users and reduce concerns about bias and discrimination.

Prioritize Education and Awareness: Education programs can raise awareness of AI's potential impact on different communities and promote understanding of the issues behind technological ethnocentrism. This can include targeted outreach and training programs for underrepresented communities, as well as general awareness campaigns for the broader public.

Encourage Interdisciplinary Collaboration: Encouraging interdisciplinary collaboration among experts in different fields can help address technological ethnocentrism. Collaboration can bring together experts in computer science, social sciences, humanities, and other fields to develop AI systems that are inclusive and equitable. This can lead to a more comprehensive understanding of the potential impacts of AI on different communities and help to create more effective solutions.

It’s time to build equity in tech.

The stories at the top are from Microsoft India’s podcast.

This is piece 33 of 50 in my 50 days of writing series. Subscribe to hear about new posts.